Currently, there are many ways to deploy a local Kubernetes cluster quickly. The official documentation recommends using minikube for this. Canonical promotes its MicroK8s. There are also k0s, k3s, kind – in general, tools for every taste. But before we talk about Kubernetes, let’s take a brief look at history.

About 30 years ago, most software applications were huge monoliths. All components were tightly interconnected, and when one element had to change, developers had to redeploy the entire application. There were no clear boundaries between parts of the application, which led to growing system complexity. Over time, some parts of the app could no longer scale, which made further changes difficult. In addition, monolithic applications took a long time to release and were rarely updated.

Such monoliths still work today, but they are gradually being broken up into small independent elements called microservices. Each microservice can be developed, updated, deployed, and scaled individually. This makes it possible to ship changes quickly and keep the entire application up to date.

As the number of components multiplies, the system grows and becomes harder to manage. The hardest part is deciding where to place each element so that the infrastructure works as efficiently as possible without additional hardware costs. Doing this manually proved too slow and laborious, so it called for automation.

This is how Kubernetes was born – a platform for the continuous, automated deployment of containerized applications. It simplified the work of developers and system administrators and became a foundation of the DevOps approach.

Developers can now deploy applications on their own, as often as needed, without even involving system administrators. Administrators no longer have to manually migrate applications if a crash occurs. All they need to do is control the Kubernetes platform, and the platform itself will take care of the applications.

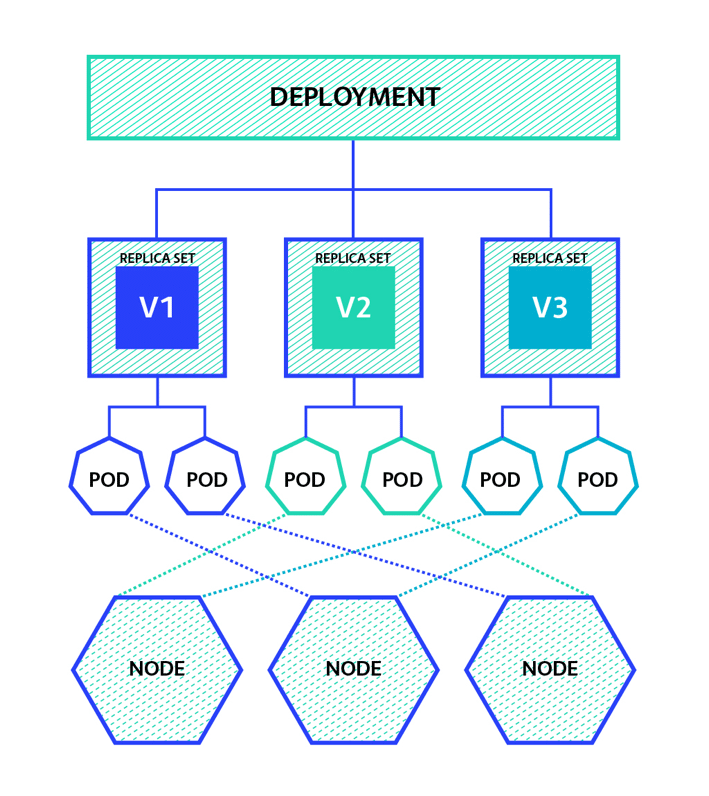

When an app is deployed, Kubernetes (k8s) chooses a server for each component and deploys it there. Each component can easily find and interact with the other components of the application, and nothing in the application code needs to change.
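To make the idea of "choosing a server for each element" concrete, here is a minimal sketch of a filter-then-score placement step. It is not the real Kubernetes scheduler: the `Node` and `Pod` classes, the `pick_node` function, and the scoring rule (prefer the node with the most CPU left over) are hypothetical simplifications chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    # Hypothetical, simplified view of a server's free resources.
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


@dataclass
class Pod:
    # Hypothetical, simplified view of what one component requests.
    name: str
    cpu_millicores: int
    memory_mib: int


def pick_node(pod: Pod, nodes: List[Node]) -> Optional[Node]:
    """Return the feasible node with the most CPU headroom, or None."""
    # Filter step: keep only nodes that can fit the pod's requests.
    feasible = [
        n for n in nodes
        if n.free_cpu_millicores >= pod.cpu_millicores
        and n.free_memory_mib >= pod.memory_mib
    ]
    if not feasible:
        return None  # nothing fits, so the component would have to wait
    # Score step: a toy rule that prefers the most CPU left after placement.
    return max(feasible, key=lambda n: n.free_cpu_millicores - pod.cpu_millicores)


nodes = [
    Node("node-a", free_cpu_millicores=500, free_memory_mib=1024),
    Node("node-b", free_cpu_millicores=2000, free_memory_mib=4096),
    Node("node-c", free_cpu_millicores=4000, free_memory_mib=256),
]
chosen = pick_node(Pod("web", cpu_millicores=1000, memory_mib=512), nodes)
print(chosen.name if chosen else "no node fits")
```

In this sketch `node-a` lacks CPU and `node-c` lacks memory, so only `node-b` survives the filter step. A real scheduler weighs many more factors, but the two-phase shape is the useful takeaway.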

How does Kubernetes work?

A large application consists of many containers that need to be managed. Kubernetes brings order to these containers and automates their management, a process called “container orchestration”. Containers are a way to package code, system tools, libraries, and configuration. When the application is ready and tested, the developer packs it into a container and transfers it to the server where it will run. With a single command, you can roll back the application to a previous version, increase its capacity, or update just one part of it.

Benefits of Kubernetes for the business

Kubernetes is used both in big data projects, such as the Tinder and BlaBlaCar applications, and for processing small amounts of data. As the technology matures, more and more startups consider adopting k8s.

There are three main benefits of Kubernetes:

– Workload scalability. The success of an application depends largely on performance and scalability. Kubernetes lets you scale the application and its infrastructure up as the workload increases and back down as the load decreases;

– IT cost optimization. Automatic scaling reduces the risk of paying for idle infrastructure resources, makes costs more transparent, and makes them easier to manage;

– Faster time to market. By splitting the overall process into separate tasks handled by flexibly managed teams, you can significantly reduce development time and simplify progress monitoring.
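One mechanism Kubernetes offers for the workload scaling described above is the Horizontal Pod Autoscaler. Its documentation gives the core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below applies that rule; the function name `desired_replicas`, the clamping parameters, and the sample numbers are assumptions made for illustration, and the real autoscaler adds more safeguards than shown here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 100,
    tolerance: float = 0.1,
) -> int:
    """Apply the documented HPA ratio rule, then clamp to the allowed range."""
    ratio = current_metric / target_metric
    # Skip scaling when the ratio is close enough to 1 (within the tolerance).
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))


# Hypothetical example: 4 replicas at 90% average CPU with a 50% target.
print(desired_replicas(4, current_metric=90, target_metric=50))
# Load drops to 25% average CPU: the same rule scales the app back down.
print(desired_replicas(4, current_metric=25, target_metric=50))
```

Scaling down by the same rule is what ties this benefit to the cost optimization point: replicas that are no longer needed stop consuming resources.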

Kubernetes deployment

There are two main ways to deploy Kubernetes:

- Automated installation – the OS is installed automatically on a hard drive (MAAS, Kickstart, Foreman, etc.)

- Live deployment over the network – the image is loaded into RAM (CoreOS, LTSP)

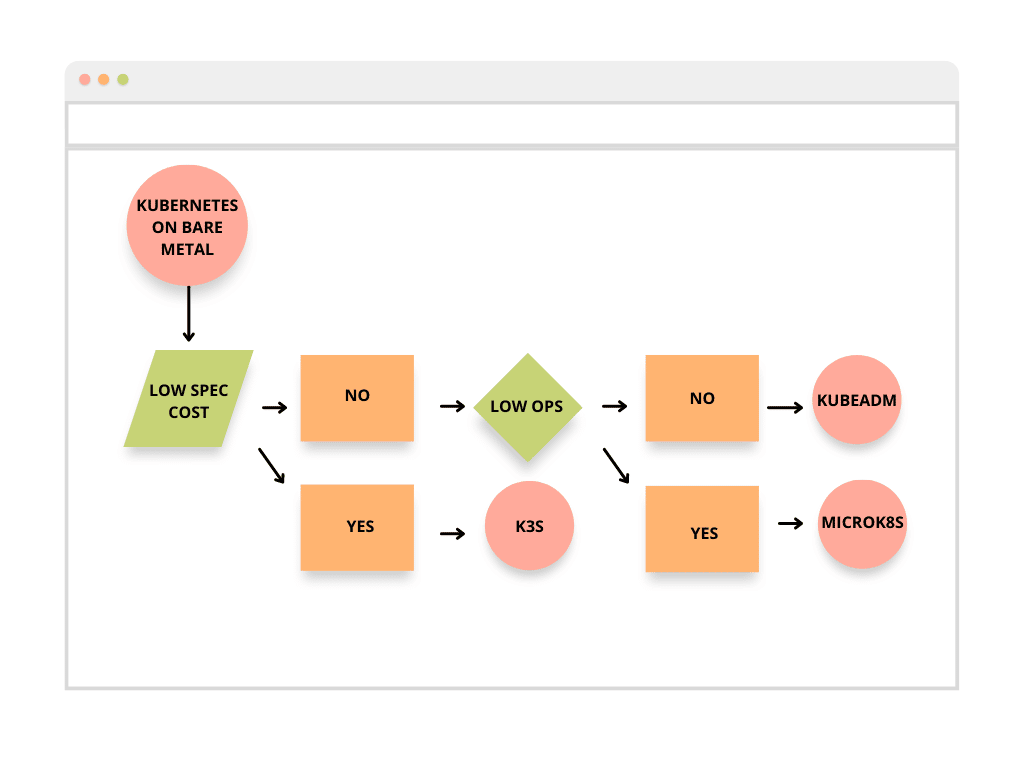

Container orchestration tools make software development easier by providing flexibility, portability across machines, speed, and easy scaling for distributed applications. Today Kubernetes can be deployed on virtual machines as well as on bare metal servers. It is one of the most widely used container orchestration platforms and is therefore supported by many popular cloud service providers.

Providers of managed Kubernetes services offer an easy mechanism for deploying and starting a cluster on their platform. These services usually run on virtualized infrastructure. Virtual machines are convenient for providers and deliver good quality of service to their clients. But interest in deploying Kubernetes on bare metal is growing, as it offers some unique advantages as well as capabilities previously available only with VMs.

First of all, Kubernetes on bare metal gives you faster and more consistent performance, which is harder to achieve with VMs because of the abstraction layer between the application and the hardware. VMs don’t access hardware directly, as it is abstracted by the underlying host environment. In addition, some server resources can remain underused. Once a host resource is assigned to a specific purpose, for example storage space allocated to a VM disk image, it cannot be used for anything else, even if the VM does not use all of that capacity.
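The underuse problem is easy to see with a little arithmetic. The sketch below sums the disk space that is reserved for VM images but not actually used. The host size, VM names, and all numbers are hypothetical and only illustrate the calculation.

```python
from typing import Dict, Tuple


def stranded_gib(vms: Dict[str, Tuple[int, int]]) -> int:
    """Sum of space allocated to VM disk images but not used, in GiB.

    Each value is a pair: (allocated GiB, used GiB).
    """
    return sum(allocated - used for allocated, used in vms.values())


# Hypothetical host with a 1000 GiB disk fully carved up between three VMs.
host_disk_gib = 1000
vms = {
    "vm-a": (400, 120),
    "vm-b": (400, 250),
    "vm-c": (200, 60),
}

idle = stranded_gib(vms)
print(f"{idle} GiB of {host_disk_gib} GiB is reserved but idle")
```

With these made-up figures, more than half of the disk is locked inside images that do not need it, and no other workload on the host can claim it.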

Let’s specify the reasons to run a K8s cluster directly on bare metal.

Benefits of Kubernetes on bare metal

There are two major differences between Kubernetes on bare metal and on virtual machines. First, there is no additional hypervisor layer, and second, it is a single-tenant environment. These two differences give you the following advantages:

Better Performance and Simplicity

The absence of a hypervisor provides two major benefits: better performance and easier network setup. The missing virtualization layer also simplifies system management and troubleshooting. With less to configure, software is easier to automate and deploy.

A Kubernetes deployment on bare metal has full access to all dedicated resources. Without a hypervisor, applications access the CPU, RAM, and other hardware resources directly, which reduces latency and maximizes resource utilization. This is useful for latency-sensitive applications, such as safety, health, media, or financial apps, as well as for workloads that require powerful hardware, for example GPU-intensive applications for scientific and financial modeling and memory-intensive database software.

More complex hardware and scalability

The absence of a hypervisor lets you use any kind of hardware, including specific types that cannot be virtualized. Using Kubernetes on bare metal also gives you room to scale your application.

Horizontal scaling is one of the most prominent benefits of Kubernetes. Organizations that adopt the orchestration platform tend to grow faster using bare metal configurations that support scalability. For example, servers with 3rd Gen Intel Xeon Scalable processors are designed with scalability in mind, which simplifies infrastructure management.

Cost-effectiveness

Bare metal can be customized to meet specific needs, so it can be optimized for heavier workloads. Its configurations offer more power for the same cost than comparable virtual machines. VMs can still be a good choice for small and medium-sized applications with predictable needs. But if your project is complex and requires more powerful hardware, you will find bare metal more cost-effective.

Also, the hypervisor under a VM is another layer to manage and maintain, which adds cost and performance overhead.

Security and control

Single tenancy provides more security. The administrator is in full control of the system and no other tenants share the hardware, which reduces the risk of cyberattacks. This makes Kubernetes on bare metal a good fit for applications that handle private or otherwise sensitive information.

A single-tenant environment also gives you total control over upgrades, patching, and the underlying hardware. With VMs, you don’t control the underlying hypervisor and kernel, so as a tenant you can’t control the patch levels or versions of the hypervisor and associated software.

Vendor independence

Deploying on VMs makes you dependent on what the vendor’s services can accommodate. As soon as you outgrow the provider’s capabilities, you will have to look for another option. This dependency on infrastructure may become a stumbling block for organizations that keep expanding their app functionality. Deploying Kubernetes on bare metal gives you full control over the hardware infrastructure, so administrators won’t find themselves tied to a vendor.

Which type of metal should you use here?

- big nodes are better than small ones

- unified out-of-band control is required (iLO, IPMI, CLI)

- a 10G network is a must

- fast disks for etcd (NVMe in a perfect world)
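The checklist above can be turned into a simple pre-flight check for candidate servers. This is only a sketch: the `ServerSpec` fields and the `check_server` function are hypothetical, the "big nodes" item is left out because the list gives no concrete size, and treating SSD as acceptable for etcd is an assumption (the list only says NVMe is ideal).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ServerSpec:
    # Hypothetical inventory record for one physical server.
    name: str
    nic_speed_gbps: int
    etcd_disk: str  # for example "nvme", "ssd", or "hdd"
    has_out_of_band_mgmt: bool  # for example IPMI or iLO access


def check_server(spec: ServerSpec) -> List[str]:
    """Return a list of checklist violations; an empty list means it passes."""
    problems = []
    if spec.nic_speed_gbps < 10:
        problems.append("network is slower than 10G")
    # Assumption: SSD is tolerated, NVMe preferred, spinning disks rejected.
    if spec.etcd_disk.lower() not in ("nvme", "ssd"):
        problems.append("etcd disk is not fast enough")
    if not spec.has_out_of_band_mgmt:
        problems.append("no unified out-of-band management")
    return problems


for server in [
    ServerSpec("rack1-01", nic_speed_gbps=25, etcd_disk="nvme", has_out_of_band_mgmt=True),
    ServerSpec("rack1-02", nic_speed_gbps=1, etcd_disk="hdd", has_out_of_band_mgmt=False),
]:
    issues = check_server(server)
    print(server.name, "OK" if not issues else "; ".join(issues))
```

Running a check like this against an inventory before installation helps catch unsuitable hardware early, when swapping it out is still cheap.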

Wrap Up

Deploying Kubernetes on physical servers is recommended for organizations that are looking for new infrastructure management capabilities. If you need help with Kubernetes, you can always reach out to our experienced DevOps engineers to get the right solution for your business goals.